Earlier this month, a go-playing computer played against a top professional in one of the most notable such matches since AlphaGo vs Lee Sedol. This time it was Zen vs Cho Chikun. This match was quite different from the AlphaGo match, and those differences are very informative.

This post is by no measure complete, and there are many more lessons that can be drawn from the games. Unfortunately this match seems to have gotten much less press than the AlphaGo match, but I think it might even be more important on the path to moving forward.

History of the players

Cho Chikun is a Korean-born Japanese 9 dan professional who has been playing as a pro since 1968 and is one of the legends of the Japanese professional scene from when Japan was dominant. While he is still an active player, Korea and China have come up and mostly surpassed Japan in recent years. goratings.org has him ranked 164th in the world at the time of writing.

While Cho Chikun might be one of the most legendary human players, Zen is probably the most legendary computer player. For several years leading up to the unveiling of AlphaGo, Zen was the strongest computer player, fueled by Monte Carlo Tree Search, which itself had been one of the key breakthroughs that allowed computers to reach amateur dan levels.

Following the AlphaGo match, Zen's developer started incorporating neural networks in similar fashion. Within a few months, Zen broke through the 6 dan barrier that it had faced previously, eventually rising to 9 dan on KGS. Since Zen has played quite a bit online, it was much more of a known quantity compared to AlphaGo, but I believe the version that played Cho Chikun had some improvements that had not been seen beforehand.

Between Zen having lots of public games and the fundamental structure being similar to programs we had seen before, Cho Chikun was surely better prepared to face off against Zen than Lee Sedol was for AlphaGo. He must have known that they were playing an even game for a reason (prior to AlphaGo, most games with computers against top professionals were run with 4-5 handicap stones), and to be prepared for a tough fight.

Computational Power

It's hard to find a bigger contrast than that between the computational power used for AlphaGo and the power used for Zen. Zen was running on 2 CPUs and 4 GPUs, while AlphaGo was running on 1920 CPUs and 280 GPUs. Notably, Zen's hardware is even less than the "single machine" version of AlphaGo. This is just the hardware for the actual match, and the training hardware is likely significantly larger for both programs.

This difference shows the difference in priorities between the two groups. AlphaGo was created in order to push the cutting edge and demonstrate what can be done when you're willing to use lots of hardware. Lots of Google projects have that sort of feel. On the other hand, Zen is meant to be a program that can be sold commercially. Such a commercial program is worthless if it cannot be run on consumer-grade hardware.

The Results

In AlphaGo vs Lee Sedol, we saw that both sides seemed to be quite a bit more comfortable playing white, and that was reflected in the results, with white winning four out of the five games. That wasn't particularly surprising, as we had seen dominant winning percentages with white from Ke Jie in the year leading up to the match.

On the other hand, in the Zen vs Cho Chikun match, black won all three games. It's hard to say whether that is really a pattern or if it's just how the match went. But it's certainly a result that flies in the face of what we thought we knew from the AlphaGo games.

Blind optimism when behind in endgame

Just like AlphaGo, Zen "goes crazy" when it decides it has a near-insurmountable deficit. Rather than play normally and hope for a small error, it plays moves that are plainly bad and dig itself deeper into the hole.

Poor time management

Zen ended game 1 with 55 minutes of main time, game 2 with 52 minutes, and game 3 with 45 minutes. Meanwhile, Cho Chikun went into byoyomi in games 1 and 2, and had only 14 minutes left of main time in game 3 (which ended rather abruptly). There's a saying that if you still have main time at the end of the game then you didn't think enough. Both Zen and AlphaGo seem to be overly aggressive with their time keeping and keep too much time as a buffer.

Confident game evaluation

One striking difference between computers and humans is that computer programs will generally be written in such a way to be able to assign a numeric evaluation to a game state. Meanwhile, a human might be judging the position much more qualitatively, weighing different aspects of the position against each other, but not directly saying that one position is better than another.

This can be a great strength, because it allows the computer to navigate difficult situations and acquire small advantage after small advantage, as the human is unlikely to be a perfect judge of their options. On the other hand, if it is taken to the extreme, then it can turn into a weakness. We saw with AlphaGo that it would give away points in endgame if it judged itself ahead. None of the Zen games went into endgame with Zen leading, so we don't know if it follows the same pattern, but let's say it does.

If you're in the habit of giving concessions when you're ahead, you need to be very careful to ensure that you are actually ahead, and don't just think you're ahead. If you believe you're ahead, but the game is actually even, then a small loss could put you behind, even if you think you're still ahead.

For a human, there's a reasonably straightforward answer: account for potential error in your own evaluation. While you do see human players make concessions in order to simplify the game when they're ahead, these moves are also a sign of great confidence in their evaluation, and plenty of human professionals have made game losing mistakes in that kind of situation. None of the computer evaluations we've seen so far have given a measure of confidence. Overconfidence in their evaluation is a weakness that can result in losses in games where they believe they are ahead but the game is actually even.

During the Zen broadcasts, we did get to see its evaluation of the board state a few times. In the early middlegame of game 1, which was a win for Cho Chikun, Zen believed itself to be a 59-41 favorite. Even near the endgame, it rated itself as a 60-40 favorite, while the professional commentators were calling the game to be favoring Cho Chikun. It's always difficult to ensure that your measurements align with reality, but in some ways that's the most important part.

5-3 Openings

In game 1, Cho Chikun opened with dual 5-3 points, and in game 2, he played another 5-3 point. Opening moves other than 3-4 and 4-4 have a bit of an odd standing among human go players. While the 3-4 and 4-4 points are generally considered optimal for the first move in a corner, other moves such as 3-3, 5-3, and 5-4 are all considered playable in some situations.

However, because of that slight difference, I expect that AlphaGo, Zen, and other programs with similar structure will almost always play 3-4 and 4-4 points. I don't believe that Zen's developer has given details about how the program is trained, other than some indications that it is similar to AlphaGo. So if the training data is mostly games against itself, then the nonstandard openings are likely going to have less data than the standard ones, possibly by a large factor.

It's possible that such a lack of training data resulted in evaluations for these positions that doesn't quite match reality. Human amateurs underestimate the nonstandard openings all the time, and quickly find themselves in a bad position when they encounter a sharp corner sequence that they didn't see coming.

Semeai and Ladders

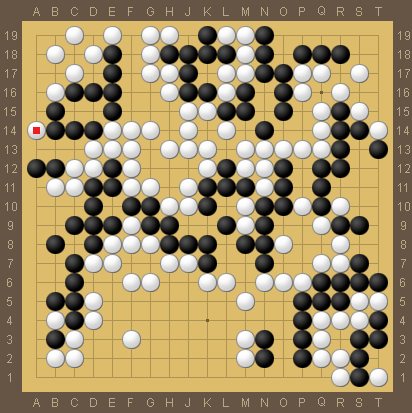

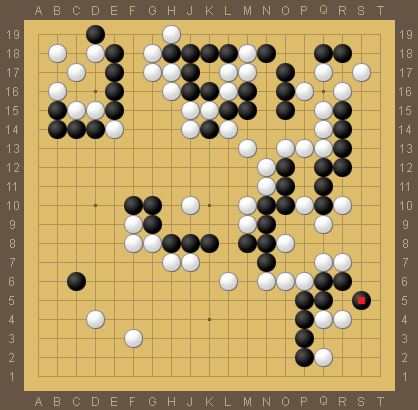

One particularly interesting position was the lower-right corner in game 1. Black (Cho Chikun) made a move that was meant to kill the corner, but actually allowed white to make a ko for life. Given that the black group surrounding the corner was almost surrounded itself, such a ko would be extremely risky. Here is the position that kicked it off.

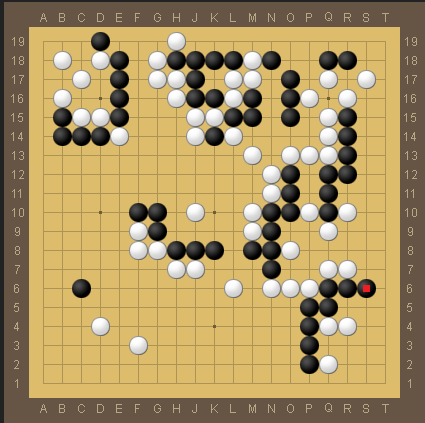

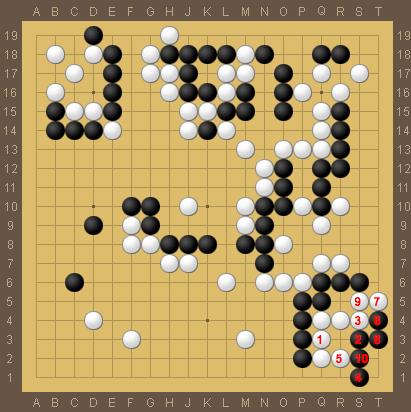

Here are the two sequences that lead to ko.

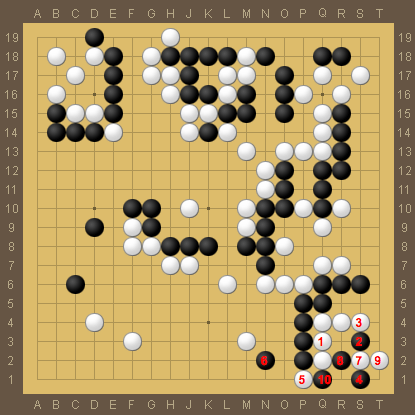

And finally, the proposed alternative that avoids ko entirely.

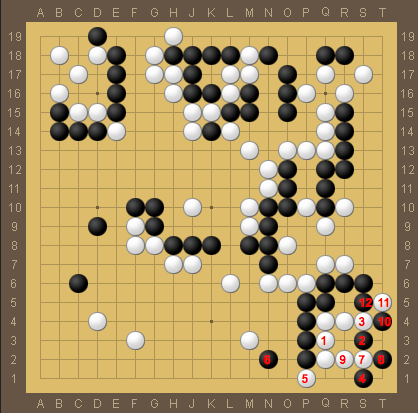

However, Zen chose to play completely differently, and instead ended up straightforwardly dying in the corner without a ko. Did Zen misread? Does it have an unreasonably aversion to ko? Perhaps it was unable to distinguish ko for life from dying because the living sequence is too long. Plus, some lines of the situation result in a semeai, which appears to be a common weakness in Monte Carlo Tree Search based programs.

Unfortunately, there are not many published details about the inner workings of Zen. In particular, I don't know what feature set is being used for the input to the neural network. AlphaGo used a notable feature layer that indicated whether a group of stones could be captured in a ladder. Ladders are rather infamous for fooling computer programs and leading to absurd sequences that even a relatively weak amateur would avoid, so it is possible that the DeepMind team added that layer as sort of a patch for that behavior.

It's undoubtably true that this kind of patch is the quickest way to a stronger computer player. Ladders are a very important concept in go and many joseki depend on particular ladders in one area or another. So ensuring that the neural network is getting the ladder correct is a clear benefit.

However, ladders can also be seen as a very simple type of semeai. You have a group that has only one liberty, but by running you can extend it to two, and then your opponent reduces it back to one. All of the stones your opponent is playing only have two liberties, so if you ever manage to create a third liberty then you sort of win the ladder. This situation is very similar to semeai, where one liberty can be the difference between your group dying and your opponent's group dying. The weaknesses to ladders and the weaknesses to semeai are probably related, so if someone finds a general solution that allows computers to read ladders, it seems quite likely that the same technique will solve the problems with semeai.

Overall, I think that Zen's match showed that state of the art computer go is nearing the level of top professionals, but still has some distance to cover. As great of a symbol AlphaGo's match was for the AI community, I am worried that AlphaGo's victory was overstated and people might be erroneously considering go to be a solved game. Go is still a good testing ground for computer and human competition for now, and will probably remain that way for several years.